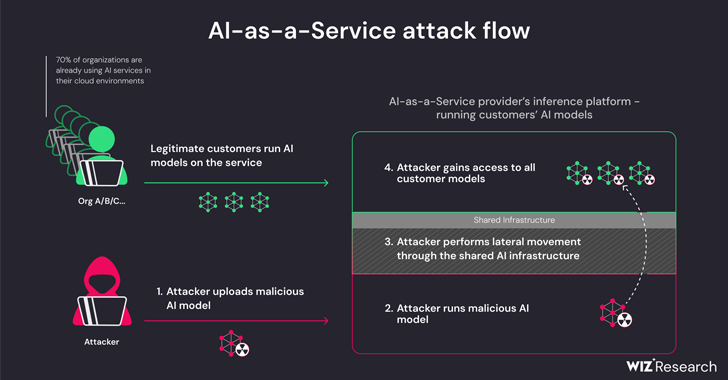

New research has found that artificial intelligence (AI)-as-a-service providers such as Hugging Face are susceptible to two critical risks that could allow threat actors to escalate privileges, gain cross-tenant access to other customers’ models, and even take over the continuous integration and continuous deployment (CI/CD) pipelines.

“Malicious models represent a major risk to AI systems, especially for AI-as-a-service providers because potential attackers may leverage these models to perform cross-tenant attacks,” Wiz researchers Shir Tamari and Sagi Tzadik said.

“The potential impact is devastating, as attackers may be able to access the millions of private AI models and apps stored within AI-as-a-service providers.”

The development comes as machine learning pipelines have emerged as a brand new supply chain attack vector, with repositories like Hugging Face becoming an attractive target for staging adversarial attacks designed to glean sensitive information and access target environments.

The threats are two-pronged, arising as a result of shared inference infrastructure takeover and shared CI/CD takeover. They make it possible to run untrusted models uploaded to the service in pickle format and take over the CI/CD pipeline to perform a supply chain attack.

The findings from the cloud security firm show that it’s possible to breach the service running the custom models by uploading a rogue model and leverage container escape techniques to break out from its own tenant and compromise the entire service, effectively enabling threat actors to obtain cross-tenant access to other customers’ models stored and run in Hugging Face.

“Hugging Face will still let the user infer the uploaded Pickle-based model on the platform’s infrastructure, even when deemed dangerous,” the researchers elaborated.

This essentially permits an attacker to craft a PyTorch (Pickle) model with arbitrary code execution capabilities upon loading and chain it with misconfigurations in the Amazon Elastic Kubernetes Service (EKS) to obtain elevated privileges and move laterally within the cluster.
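
As a rough illustration of why loading a pickle amounts to running code, the sketch below uses only Python's standard `pickle` module. The `Demo` class and the `BlockAllUnpickler` helper are hypothetical names chosen for this example, and the harmless `sorted` call stands in for whatever callable an attacker might pick. It is not the researchers' proof of concept, only a minimal demonstration of the underlying mechanism and of one documented way to restrict it.

```python
import io
import pickle


class Demo:
    """Hypothetical stand-in for a serialized model object."""

    def __reduce__(self):
        # pickle stores "call sorted([3, 1, 2])" instead of the object itself.
        # Whoever writes the file chooses this callable; here it is harmless.
        return (sorted, ([3, 1, 2],))


class BlockAllUnpickler(pickle.Unpickler):
    """Refuses every global lookup, so no callable can be resolved on load."""

    def find_class(self, module, name):
        raise pickle.UnpicklingError(f"blocked global: {module}.{name}")


payload = pickle.dumps(Demo())

# Plain loading invokes the embedded callable and returns its result.
result = pickle.loads(payload)

# The restricted loader rejects the same payload before anything is called.
try:
    BlockAllUnpickler(io.BytesIO(payload)).load()
    blocked = False
except pickle.UnpicklingError:
    blocked = True

print(result, blocked)
```

Overriding `find_class` narrows what a pickle can reference, but it does not make untrusted pickles safe in general, which is consistent with the advice to sandbox untrusted models.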

“The secrets we obtained could have had a significant impact on the platform if they were in the hands of a malicious actor,” the researchers said. “Secrets within shared environments may often lead to cross-tenant access and sensitive data leakage.”

To mitigate the issue, it’s recommended to enable IMDSv2 with Hop Limit so as to prevent pods from accessing the Instance Metadata Service (IMDS) and obtaining the role of a Node within the cluster.
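
One way this mitigation might be applied to an existing instance is sketched below. The `enforce_imdsv2` helper, the `RecordingClient` stub, and the instance ID are hypothetical; the keyword arguments mirror the EC2 `ModifyInstanceMetadataOptions` parameters as exposed by boto3, and a hop limit of 1 is assumed to be enough to stop pods that sit one network hop away from the node. A real deployment would pass a client created with `boto3.client("ec2")` and would more likely set these options in the node group's launch template.

```python
def enforce_imdsv2(ec2_client, instance_id: str, hop_limit: int = 1):
    """Require session tokens (IMDSv2) and cap the token response hop limit."""
    return ec2_client.modify_instance_metadata_options(
        InstanceId=instance_id,
        HttpTokens="required",              # reject token-less IMDSv1 requests
        HttpPutResponseHopLimit=hop_limit,  # token replies cannot cross extra hops
        HttpEndpoint="enabled",
    )


class RecordingClient:
    """Hypothetical stub that records calls instead of contacting AWS."""

    def __init__(self):
        self.calls = []

    def modify_instance_metadata_options(self, **kwargs):
        self.calls.append(kwargs)
        return kwargs


client = RecordingClient()
enforce_imdsv2(client, "i-0123456789abcdef0")  # placeholder instance ID
print(client.calls[0])
```

Pods that run with host networking share the node's network namespace, so a hop limit alone would not be expected to block them.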

The research also found that it’s possible to achieve remote code execution via a specially crafted Dockerfile when running an application on the Hugging Face Spaces service, and use it to pull and push (i.e., overwrite) all the images that are available on an internal container registry.

Hugging Face, in coordinated disclosure, said it has addressed all the identified issues. It’s also urging users to employ models only from trusted sources, enable multi-factor authentication (MFA), and refrain from using pickle files in production environments.

“This research demonstrates that utilizing untrusted AI models (especially Pickle-based ones) could result in serious security consequences,” the researchers said. “Furthermore, if you intend to let users utilize untrusted AI models in your environment, it is extremely important to ensure that they are running in a sandboxed environment.”

The disclosure follows another study from Lasso Security showing that it’s possible for generative AI models like OpenAI ChatGPT and Google Gemini to distribute malicious (and non-existent) code packages to unsuspecting software developers.

In other words, the idea is to find a recommendation for an unpublished package and publish a trojanized package in its place in order to propagate the malware. The phenomenon of AI package hallucinations underscores the need for exercising caution when relying on large language models (LLMs) for coding solutions.
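
As one possible guardrail, a team could compare package names suggested by an LLM against a list it has already reviewed before anything is installed. The sketch below assumes such a list exists; `REVIEWED`, `unreviewed_packages`, and the package name `totally-made-up-pkg` are hypothetical and used only for illustration. Names are compared after PEP 503 style normalization so that `NumPy` and `numpy` count as the same project.

```python
import re

# Hypothetical allowlist of packages the team has already vetted.
REVIEWED = {"requests", "numpy", "huggingface-hub"}


def normalize(name: str) -> str:
    """Lowercase and collapse runs of '-', '_' and '.' into a single hyphen."""
    return re.sub(r"[-_.]+", "-", name).lower()


def unreviewed_packages(suggested, reviewed=REVIEWED):
    """Return the suggested names that are not on the reviewed list."""
    reviewed_norm = {normalize(name) for name in reviewed}
    return [name for name in suggested if normalize(name) not in reviewed_norm]


flagged = unreviewed_packages(["NumPy", "huggingface_hub", "totally-made-up-pkg"])
print(flagged)
```

Checking only whether a name exists on a public index would not be enough here, since the attack described relies on someone registering the hallucinated name first.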

AI company Anthropic, for its part, has also detailed a new method called “many-shot jailbreaking” that can be used to bypass safety protections built into LLMs and produce responses to potentially harmful queries by taking advantage of the models’ context window.

“The ability to input increasingly-large amounts of information has obvious advantages for LLM users, but it also comes with risks: vulnerabilities to jailbreaks that exploit the longer context window,” the company said earlier this week.

The technique, in a nutshell, involves introducing a large number of faux dialogues between a human and an AI assistant within a single prompt for the LLM in an attempt to “steer model behavior” and get it to respond to queries that it wouldn’t otherwise (e.g., “How do I build a bomb?”).

Some parts of this article are sourced from:

thehackernews.com