Cybersecurity researchers have discovered a critical security flaw in artificial intelligence (AI)-as-a-service provider Replicate that could have allowed threat actors to gain access to proprietary AI models and sensitive information.

“Exploitation of this vulnerability would have allowed unauthorized access to the AI prompts and results of all Replicate’s platform customers,” cloud security firm Wiz said in a report published this week.

The issue stems from the fact that AI models are typically packaged in formats that allow arbitrary code execution, which an attacker could weaponize to perform cross-tenant attacks by means of a malicious model.
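To illustrate why a model file can amount to executable code, here is a minimal sketch using Python's `pickle` format, which is widely used for serializing models. It is a general illustration and not the technique Wiz used against Replicate; the class `MaliciousModel` and the function `load_untrusted_model` are hypothetical names, and the payload is a harmless arithmetic expression.

```python
import pickle


class MaliciousModel:
    """Hypothetical stand-in for a model object (illustration only)."""

    def __reduce__(self):
        # pickle records this (callable, args) pair and calls it on load.
        # A real attacker would substitute something harmful; this sketch
        # evaluates a harmless expression instead.
        return (eval, ("6 * 7",))


def load_untrusted_model(blob: bytes):
    """Hypothetical loader: deserializes whatever bytes it is given."""
    # The callable chosen by the file's author runs here, before the
    # caller has any chance to inspect the result.
    return pickle.loads(blob)


payload = pickle.dumps(MaliciousModel())
loaded = load_untrusted_model(payload)
print(loaded)  # the value produced by the attacker-chosen call, not a model
```

The point of the sketch is that loading is enough: no method on the "model" is ever called, yet code selected by whoever produced the file has already run.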


Replicate makes use of an open-source tool called Cog to containerize and package machine learning models that can then be deployed either in a self-hosted environment or to Replicate.

Wiz said that it created a rogue Cog container and uploaded it to Replicate, ultimately using it to achieve remote code execution on the service’s infrastructure with elevated privileges.

“We suspect this code-execution technique is a pattern, where companies and organizations run AI models from untrusted sources, even though these models are code that could potentially be malicious,” security researchers Shir Tamari and Sagi Tzadik said.

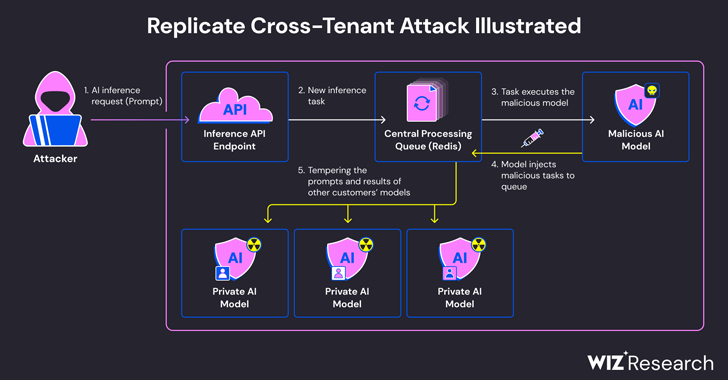

The attack technique devised by the company then leveraged an already-established TCP connection associated with a Redis server instance within the Kubernetes cluster hosted on the Google Cloud Platform to inject arbitrary commands.

What’s more, with the centralized Redis server being used as a queue to manage multiple customer requests and their responses, it could be abused to facilitate cross-tenant attacks by tampering with the process in order to insert rogue tasks that could impact the results of other customers’ models.

These rogue manipulations not only threaten the integrity of the AI models, but also pose significant risks to the accuracy and reliability of AI-driven outputs.
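The risk of a queue shared across customers can be sketched in a few lines of Python. This is a simplified in-memory model under stated assumptions and does not reflect Replicate's actual architecture or the Redis protocol: `Task`, `SharedQueue`, `run_worker`, and the tenant names are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Task:
    tenant: str  # customer who will receive the result
    prompt: str  # input to run through that customer's model


class SharedQueue:
    """Hypothetical queue shared by all tenants, with no check on who writes."""

    def __init__(self) -> None:
        self._tasks: deque = deque()

    def push(self, task: Task) -> None:
        self._tasks.append(task)

    def pop(self) -> Task:
        return self._tasks.popleft()

    def __len__(self) -> int:
        return len(self._tasks)


def run_worker(queue: SharedQueue) -> dict:
    """Drain the queue and collect outputs per tenant (the model is a stub)."""
    results: dict = {}
    while len(queue) > 0:
        task = queue.pop()
        # The worker trusts the tenant field on every queued task.
        results.setdefault(task.tenant, []).append(f"output for: {task.prompt}")
    return results


queue = SharedQueue()
queue.push(Task(tenant="victim", prompt="legitimate prompt"))
# Anyone able to write to the queue can add a task under another tenant's name.
queue.push(Task(tenant="victim", prompt="attacker-chosen prompt"))
results = run_worker(queue)
```

In this sketch the worker cannot tell the injected task from the genuine one, so the victim's results now include output driven by attacker-chosen input.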

“An attacker could have queried the private AI models of customers, potentially exposing proprietary knowledge or sensitive data involved in the model training process,” the researchers said. “Additionally, intercepting prompts could have exposed sensitive data, including personally identifiable information (PII).”

The shortcoming, which was responsibly disclosed in January 2024, has since been addressed by Replicate. There is no evidence that the vulnerability was exploited in the wild to compromise customer data.

The disclosure comes a little over a month after Wiz detailed now-patched risks in platforms like Hugging Face that could allow threat actors to escalate privileges, gain cross-tenant access to other customers’ models, and even take over the continuous integration and continuous deployment (CI/CD) pipelines.

“Malicious models represent a major risk to AI systems, especially for AI-as-a-service providers because attackers may leverage these models to perform cross-tenant attacks,” the researchers concluded.

“The potential impact is devastating, as attackers may be able to access the millions of private AI models and apps stored within AI-as-a-service providers.”


Some parts of this article are sourced from:

thehackernews.com
