Security and IT teams are routinely forced to adopt software before fully understanding the security risks. And AI tools are no exception.

Employees and business leaders alike are flocking to generative AI software and similar programs, often unaware of the major SaaS security vulnerabilities they are introducing into the enterprise. A February 2023 generative AI survey of 1,000 executives revealed that 49% of respondents use ChatGPT now, and 30% plan to tap into the ubiquitous generative AI tool soon. Ninety-nine percent of those using ChatGPT claimed some form of cost savings, and 25% attested to reducing expenses by $75,000 or more. As the researchers conducted this survey a mere three months after ChatGPT’s general availability, today’s ChatGPT and AI tool usage is undoubtedly higher.

Security and risk teams are already overwhelmed protecting their SaaS estate (which has now become the operating system of business) from common vulnerabilities such as misconfigurations and over-permissioned users. This leaves little bandwidth to assess the AI tool threat landscape, the unsanctioned AI tools currently in use, and the implications for SaaS security.

With threats emerging outside and inside organizations, CISOs and their teams must understand the most relevant AI tool risks to SaaS systems, and how to mitigate them.

1 — Threat Actors Can Exploit Generative AI to Dupe SaaS Authentication Protocols

As ambitious employees devise ways for AI tools to help them accomplish more with less, so, too, do cybercriminals. Using generative AI with malicious intent is simply inevitable, and it is already possible.

AI’s ability to impersonate humans exceedingly well renders weak SaaS authentication protocols especially vulnerable to hacking. According to Techopedia, threat actors can misuse generative AI for password-guessing, CAPTCHA-cracking, and building more potent malware. While these methods may sound limited in their attack range, the January 2023 CircleCI security breach was attributed to a single engineer’s laptop becoming infected with malware.

Likewise, a few noted technology academics recently posed a plausible hypothetical for generative AI running a phishing attack:

“A hacker uses ChatGPT to generate a personalized spear-phishing message based on your company’s marketing materials and phishing messages that have been successful in the past. It succeeds in fooling people who have been well trained in email awareness, because it doesn’t look like the messages they’ve been trained to detect.”

Malicious actors will avoid the most fortified entry point, typically the SaaS platform itself, and instead target more vulnerable side doors. They won’t bother with the deadbolt and guard dog located by the front door when they can sneak around back to the unlocked patio doors.

Relying on authentication alone to keep SaaS data secure is not a viable option. Beyond implementing multi-factor authentication (MFA) and physical security keys, security and risk teams need visibility and continuous monitoring for the entire SaaS perimeter, along with automated alerts for suspicious login activity.
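To make "automated alerts for suspicious login activity" more concrete, here is a minimal sketch in Python. It assumes login events have already been exported from a SaaS audit log into simple records; the `LoginEvent` fields, the two heuristics (a streak of failed logins, and a successful login from a country not seen before for that user), and the threshold are all illustrative assumptions, not any vendor's detection logic.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginEvent:
    # Hypothetical record shape; real audit logs carry many more fields.
    user: str
    country: str
    success: bool


def flag_suspicious_logins(events, max_failures=3):
    """Return (user, reason) alerts for a chronological list of login events."""
    known_countries = defaultdict(set)  # countries seen on earlier successful logins
    failure_streak = defaultdict(int)   # consecutive failed logins per user
    alerts = []
    for event in events:
        if not event.success:
            failure_streak[event.user] += 1
            # Alert once, at the moment the streak reaches the threshold.
            if failure_streak[event.user] == max_failures:
                alerts.append((event.user, "repeated failed logins"))
            continue
        failure_streak[event.user] = 0
        seen = known_countries[event.user]
        # The first successful login only establishes a baseline.
        if seen and event.country not in seen:
            alerts.append((event.user, f"login from new country: {event.country}"))
        seen.add(event.country)
    return alerts
```

A production system would weigh far more signals (device, IP reputation, time of day), but the shape is the same: keep per-user state, compare each new event against it, and emit an alert when a rule trips.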

These insights are necessary not only for cybercriminals’ generative AI activities but also for employees’ AI tool connections to SaaS platforms.

2 — Employees Connect Unsanctioned AI Tools to SaaS Platforms Without Considering the Risks

Employees are now relying on unsanctioned AI tools to make their jobs easier. After all, who wants to work harder when AI tools increase effectiveness and efficiency? Like any form of shadow IT, employee adoption of AI tools is driven by the best intentions.

For example, an employee is convinced they could manage their time and to-dos better, but the effort to monitor and analyze their task management and meeting involvement feels like a large undertaking. AI can perform that monitoring and analysis with ease and provide recommendations almost instantly, giving the employee the productivity boost they crave in a fraction of the time. Signing up for an AI scheduling assistant, from the end user’s perspective, is as simple and (seemingly) innocuous as:

- Registering for a free trial or enrolling with a credit card

- Agreeing to the AI tool’s Read/Write permission requests

- Connecting the AI scheduling assistant to their corporate Gmail, Google Drive, and Slack accounts

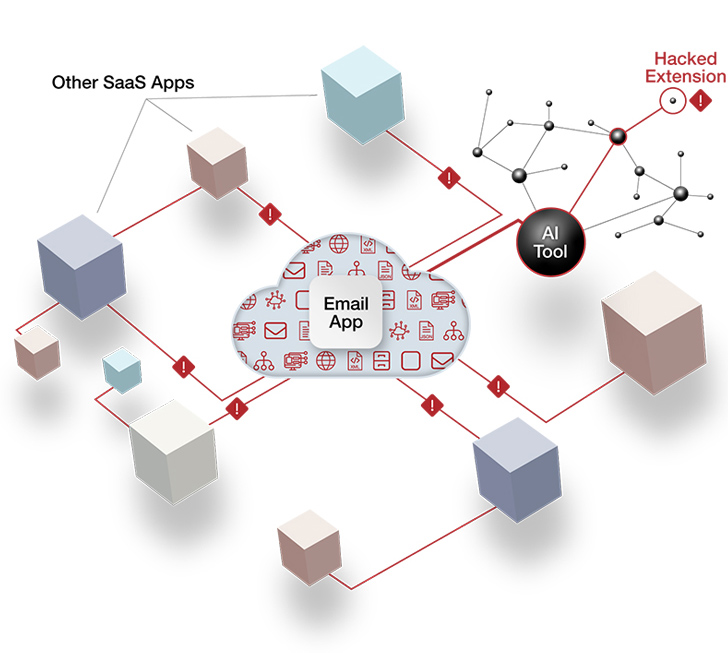

This process, however, creates invisible conduits to an organization’s most sensitive data. These AI-to-SaaS connections inherit the user’s permission settings, allowing a hacker who successfully compromises the AI tool to move quietly and laterally across the authorized SaaS systems. A hacker can access and exfiltrate data until suspicious activity is noticed and acted on, which can take anywhere from months to years.

AI tools, like most SaaS apps, use OAuth access tokens for ongoing connections to SaaS platforms. Once the authorization is complete, the token for the AI scheduling assistant will maintain consistent, API-based communication with Gmail, Google Drive, and Slack accounts, all without requiring the user to log in or authenticate at regular intervals. The threat actor who can capitalize on this OAuth token has stumbled on the SaaS equivalent of spare keys “hidden” under the doormat.
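Because these tokens persist without re-authentication, one practical exercise is to review which grants exist and what they allow. The following Python sketch assumes the grants have already been exported into plain records; the `OAuthGrant` shape, the scope labels in `BROAD_SCOPES`, and the 90-day age threshold are hypothetical placeholders, since each SaaS platform defines its own scope strings and export format.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Illustrative scope labels only; real platforms define their own scope strings.
BROAD_SCOPES = {"mail.read_write", "files.read_write", "chat.read_write"}


@dataclass(frozen=True)
class OAuthGrant:
    # Hypothetical record shape for an exported third-party app grant.
    app_name: str
    user: str
    scopes: frozenset
    granted_at: datetime


def risky_grants(grants, now, max_age_days=90):
    """Return (grant, reasons) pairs for grants that merit a manual review."""
    findings = []
    for grant in grants:
        reasons = []
        broad = sorted(grant.scopes & BROAD_SCOPES)
        if broad:
            reasons.append("broad scopes: " + ", ".join(broad))
        if now - grant.granted_at > timedelta(days=max_age_days):
            reasons.append("long-lived grant")
        if reasons:
            findings.append((grant, reasons))
    return findings
```

Called with `now=datetime.now(timezone.utc)` and timezone-aware `granted_at` values, the function returns the grants worth a closer look. It only surfaces candidates for review; whether to revoke a token remains a human decision.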

Figure 1: An illustration of an AI tool establishing an OAuth token connection with a major SaaS platform. Credit: AppOmni

Security and risk teams often lack the SaaS security tooling to monitor or control such an attack surface risk. Legacy tools like cloud access security brokers (CASBs) and secure web gateways (SWGs) won’t detect or alert on AI-to-SaaS connectivity.

Yet these AI-to-SaaS connections aren’t the only means by which employees can unintentionally expose sensitive data to the outside world.

3 — Sensitive Data Shared with Generative AI Tools Is Susceptible to Leaks

The data employees submit to generative AI tools, often with the goal of expediting work and improving its quality, can end up in the hands of the AI provider itself, an organization’s competitors, or the general public.

Because most generative AI tools are free and exist outside the organization’s tech stack, security and risk professionals have no oversight or security controls for these tools. This is a growing concern among enterprises, and generative AI data leaks have already happened.

A March incident inadvertently enabled ChatGPT users to see other users’ chat titles and histories in the website’s sidebar. Concern arose not just over leaks of sensitive organizational information but also over user identities being revealed and compromised. OpenAI, the developer of ChatGPT, announced the ability for users to turn off chat history. In theory, this option stops ChatGPT from sending data back to OpenAI for product improvement, but it requires employees to manage data retention settings. Even with this setting enabled, OpenAI retains conversations for 30 days and exercises the right to review them “for abuse” prior to their expiration.

This bug and the data retention fine print haven’t gone unnoticed. In May, Apple restricted employees from using ChatGPT over concerns about confidential data leaks. While the tech giant took this stance as it builds its own generative AI tools, it joined enterprises such as Amazon, Verizon, and JPMorgan Chase in the ban. Apple also directed its developers to avoid GitHub Copilot, owned by top competitor Microsoft, for automating code.

Common generative AI use cases are replete with data leak risks. Consider a product manager who prompts ChatGPT to make the message in a product roadmap document more compelling. That product roadmap almost certainly contains product information and plans never intended for public consumption, let alone a competitor’s prying eyes. A similar ChatGPT bug, which an organization’s IT team has no ability to escalate or remediate, could result in serious data exposure.

Stand-alone generative AI does not create SaaS security risk. But what is isolated today is connected tomorrow. Ambitious employees will naturally seek to extend the usefulness of unsanctioned generative AI tools by integrating them into SaaS applications. Currently, ChatGPT’s Slack integration demands more work than the average Slack connection, but it is not an exceedingly high bar for a savvy, motivated employee. The integration uses OAuth tokens exactly like the AI scheduling assistant example described above, exposing an organization to the same risks.

How Organizations Can Safeguard Their SaaS Environments from Significant AI Tool Risks

Organizations need guardrails in place for AI tool data governance, specifically for their SaaS environments. This requires comprehensive SaaS security tooling and proactive cross-functional diplomacy.

Employees use unsanctioned AI tools largely because of limitations in the approved tech stack. The desire to boost productivity and increase quality is a virtue, not a vice. There is an unmet need, and CISOs and their teams should approach employees with an attitude of collaboration rather than condemnation.

Good-faith conversations with leaders and end users regarding their AI tool requests are vital to building trust and goodwill. At the same time, CISOs must convey legitimate security concerns and the potential ramifications of risky AI behavior. Security leaders should consider themselves the accountants who explain the best ways to work within the tax code rather than the IRS auditors perceived as enforcers unconcerned with anything beyond compliance. Whether it is putting proper security settings in place for the desired AI tools or sourcing viable alternatives, the most successful CISOs strive to help employees maximize their productivity.

Fully understanding and addressing the risks of AI tools requires a comprehensive and robust SaaS security posture management (SSPM) solution. SSPM gives security and risk practitioners the insights and visibility they need to navigate the ever-changing state of SaaS risk.

To improve authentication strength, security teams can use SSPM to enforce MFA across all SaaS apps in the estate and monitor for configuration drift. SSPM enables security teams and SaaS app owners to enforce best practices without studying the intricacies of each SaaS app and AI tool setting.
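Configuration drift monitoring boils down to comparing each app's current settings against an agreed baseline. The Python sketch below illustrates the idea only; the setting names in `BASELINE` and the per-app dictionaries are hypothetical placeholders, not the schema of any SSPM product or SaaS platform.

```python
# Hypothetical baseline; the setting names are placeholders, not a vendor schema.
BASELINE = {"mfa_required": True, "session_timeout_minutes": 30}


def config_drift(baseline, current):
    """Return {setting: (expected, actual)} for settings that differ from baseline."""
    drift = {}
    for setting, expected in baseline.items():
        actual = current.get(setting)  # None means the setting is missing entirely
        if actual != expected:
            drift[setting] = (expected, actual)
    return drift


def apps_with_drift(baseline, app_configs):
    """Map each app name to its drift, omitting apps that match the baseline."""
    report = {}
    for app, config in app_configs.items():
        drift = config_drift(baseline, config)
        if drift:
            report[app] = drift
    return report
```

Running `apps_with_drift(BASELINE, app_configs)` on a schedule and alerting on any non-empty report is one simple way to notice when, for example, MFA has been switched off for a single app.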

The ability to inventory unsanctioned and approved AI tools connected to the SaaS ecosystem will reveal the most urgent risks to investigate. Continuous monitoring automatically alerts security and risk teams when new AI connections are established. This visibility plays a significant role in reducing the attack surface and taking action when an unsanctioned, unsecure, and/or over-permissioned AI tool surfaces in the SaaS ecosystem.
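As a rough illustration of that inventory step, the sketch below compares the apps currently connected to a SaaS platform against an approved list and against the previous scan. The function name, the app names in the usage note, and the idea of feeding it plain lists are assumptions for illustration; a real inventory would come from each platform's admin tooling.

```python
def review_connections(connected, approved, previously_seen):
    """Classify currently connected apps for follow-up.

    'unsanctioned' lists connected apps missing from the approved list;
    'newly_connected' lists connected apps absent from the previous scan.
    """
    connected = set(connected)
    return {
        "unsanctioned": sorted(connected - set(approved)),
        "newly_connected": sorted(connected - set(previously_seen)),
    }
```

For instance, with hypothetical apps `ai-scheduler`, `crm-sync`, and `ai-notes` connected, where only `crm-sync` and `ai-notes` are approved and `ai-notes` was absent from the last scan, the result flags `ai-scheduler` as unsanctioned and `ai-notes` as newly connected.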

AI tool reliance will almost certainly continue to spread rapidly. Outright bans are never foolproof. Instead, a pragmatic mix of security leaders sharing their peers’ goal of boosting productivity and reducing repetitive tasks, coupled with the right SSPM solution, is the best approach to drastically cutting SaaS data exposure and breach risk.

Some parts of this article are sourced from:

thehackernews.com