Certain online risks to children are on the rise, according to a recent report from Thorn, a technology nonprofit whose mission is to build technology to defend children from sexual abuse. Research shared in the Emerging Online Trends in Child Sexual Abuse 2023 report indicates that minors are increasingly taking and sharing sexual images of themselves. This activity may occur consensually or coercively, as youth also report an increase in risky online interactions with adults.

“In our digitally connected world, child sexual abuse material is easily and increasingly shared on the platforms we use in our daily lives,” said John Starr, VP of Strategic Impact at Thorn. “Harmful interactions between youth and adults are not isolated to the dark corners of the web. As fast as the digital community builds innovative platforms, predators are co-opting these spaces to exploit children and share this egregious content.”

These trends and others shared in the Emerging Online Trends report align with what other child safety organizations are reporting. The CyberTipline run by the National Center for Missing and Exploited Children (NCMEC) has seen a 329% increase in child sexual abuse material (CSAM) files reported in the last five years. In 2022 alone, NCMEC received more than 88.3 million CSAM files.

Several factors may be contributing to the increase in reports:

This content is a potential risk for every platform that hosts user-generated content, whether a profile picture or expansive cloud storage space.

Only technology can tackle the scale of this issue

Hashing and matching is one of the most important pieces of technology that tech companies can use to help keep users and platforms protected from the risks of hosting this content, while also helping to disrupt the viral spread of CSAM and the cycles of revictimization.

Millions of CSAM files are shared online every year. A large portion of these files are previously reported and verified CSAM. Because the content is known and has already been added to an NGO hash list, it can be detected using hashing and matching.

What is hashing and matching?

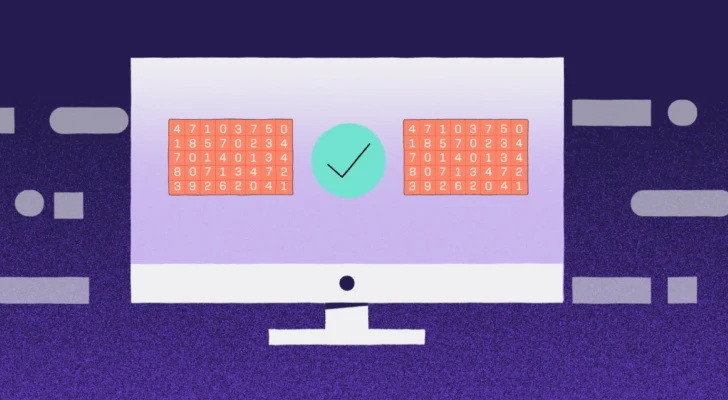

Put simply, hashing and matching is a programmatic way to detect CSAM and disrupt its spread online. Two types of hashing are commonly used: perceptual and cryptographic hashing. Both technologies convert a file into a unique string of numbers called a hash value. It's like a digital fingerprint for each piece of content.
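As a rough illustration of how the two kinds of hashing differ, the sketch below computes a cryptographic hash with Python's standard `hashlib` module and a toy perceptual hash over a tiny grayscale pixel grid. The function names, the averaging scheme, and the sample pixel values are assumptions chosen for illustration only; production systems use far more robust perceptual algorithms, and nothing here reflects how any specific product is implemented.

```python
import hashlib


def cryptographic_hash(data: bytes) -> str:
    """Return the SHA-256 digest of the raw bytes as a hex string.

    Any change to the input, even a single byte, yields a different digest.
    """
    return hashlib.sha256(data).hexdigest()


def average_hash(pixels: list) -> int:
    """Toy perceptual hash (illustrative assumption, not a production algorithm).

    Emits one bit per pixel: 1 if the pixel is brighter than the grid's mean,
    otherwise 0. Small uniform changes in brightness leave the bits unchanged.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bit positions in which two perceptual hashes differ."""
    return bin(a ^ b).count("1")


# Hypothetical 2x2 grayscale grids: the second is the first, slightly brightened.
original = [[10, 200], [220, 30]]
brightened = [[20, 210], [230, 40]]

same_perceptual = average_hash(original) == average_hash(brightened)
same_cryptographic = (
    cryptographic_hash(bytes(p for row in original for p in row))
    == cryptographic_hash(bytes(p for row in brightened for p in row))
)
```

In this toy case the perceptual hashes agree while the cryptographic digests do not, which is why perceptual hashing is used to catch altered copies and cryptographic hashing to catch exact duplicates.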

To detect CSAM, content is hashed, and the resulting hash values are compared against hash lists of known CSAM. This methodology allows tech companies to identify, block, or remove this illicit content from their platforms.
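The comparison step described above can be sketched in a few lines. This is a minimal example under stated assumptions: the hash list is an in-memory Python set built from placeholder byte strings, the perceptual hashes are plain integers, and the helper names and the distance threshold are hypothetical. Real hash lists are supplied by NGOs and vetted partners and are matched through dedicated services.

```python
import hashlib
from typing import Optional


def sha256_hex(data: bytes) -> str:
    """Cryptographic fingerprint of a file's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def exact_match(data: bytes, known_hashes: set) -> bool:
    """True if the file's SHA-256 digest appears in the hash list."""
    return sha256_hex(data) in known_hashes


def closest_perceptual_match(
    candidate: int, known: list, max_distance: int
) -> Optional[int]:
    """Return the known perceptual hash nearest to `candidate`.

    Distance is the number of differing bits (Hamming distance). Returns
    None when no known hash is within `max_distance` bits.
    """
    best = None
    best_distance = max_distance + 1
    for k in known:
        d = bin(candidate ^ k).count("1")
        if d < best_distance:
            best, best_distance = k, d
    return best


# Placeholder data standing in for a hash list (assumption for illustration).
known_files = [b"placeholder file A", b"placeholder file B"]
hash_list = {sha256_hex(f) for f in known_files}

upload_is_known = exact_match(b"placeholder file A", hash_list)
altered_is_known = exact_match(b"placeholder file A!", hash_list)
```

An exact match only fires when the bytes are identical, so a platform would typically pair it with a thresholded perceptual comparison such as `closest_perceptual_match` to catch resized or re-encoded copies.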

Growing the corpus of known CSAM

Hashing and matching is the foundation of CSAM detection. Because it relies on matching against hash lists of previously reported and verified content, the number of known CSAM hash values in the database that a company matches against is critical.

Safer, a tool for proactive CSAM detection built by Thorn, provides access to a large database aggregating 29+ million known CSAM hash values. Safer also enables technology companies to share hash lists with each other (either named or anonymously), further expanding the corpus of known CSAM, which helps to disrupt its viral spread.

Removing CSAM from the internet

To eliminate CSAM from the internet, tech companies and NGOs each have a role to play. “Content-hosting platforms are key partners, and Thorn is committed to empowering the tech industry with tools and resources to combat child sexual abuse at scale,” Starr added. “This is about safeguarding our children. It’s also about helping tech platforms protect their users and themselves from the risks of hosting this content. With the right tools, the internet can be safer.”

In 2022, Safer hashed more than 42.1 billion images and videos for its customers. That resulted in 520,000 files of known CSAM being found on their platforms. To date, Safer has helped its customers identify more than two million pieces of CSAM on their platforms.

The more platforms that use CSAM detection tools, the better the chance that the alarming rise of child sexual abuse material online can be reversed.

Some parts of this article are sourced from:

thehackernews.com