The U.S. National Institute of Standards and Technology (NIST) is calling attention to the privacy and security challenges that arise from the increased deployment of artificial intelligence (AI) systems in recent years.

“These security and privacy challenges include the potential for adversarial manipulation of training data, adversarial exploitation of model vulnerabilities to adversely affect the performance of the AI system, and even malicious manipulations, modifications or mere interaction with models to exfiltrate sensitive information about people represented in the data, about the model itself, or proprietary enterprise data,” NIST said.

As AI systems become integrated into online services at a rapid pace, driven in part by the emergence of generative AI systems like OpenAI ChatGPT and Google Bard, the models powering these technologies face a number of threats at various stages of machine learning operations.

These include corrupted training data, security flaws in the software components, data model poisoning, supply chain weaknesses, and privacy breaches arising from prompt injection attacks.

“For the most part, software developers need more people to use their product so it can get better with exposure,” NIST computer scientist Apostol Vassilev said. “But there is no guarantee the exposure will be good. A chatbot can spew out bad or toxic information when prompted with carefully designed language.”

The attacks, which can have significant impacts on availability, integrity, and privacy, are broadly classified as follows:

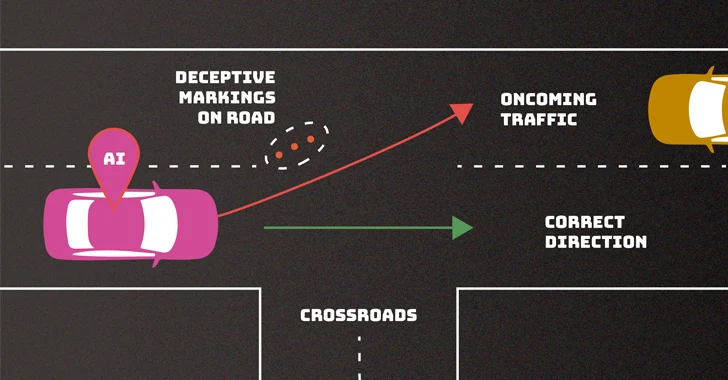

- Evasion attacks, which aim to generate adversarial output after a model is deployed

- Poisoning attacks, which target the training phase of the algorithm by introducing corrupted data

- Privacy attacks, which aim to glean sensitive information about the system or the data it was trained on by posing questions that circumvent existing guardrails

- Abuse attacks, which aim to compromise legitimate sources of information, such as a web page with incorrect pieces of information, to repurpose the system’s intended use
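To make the poisoning category concrete, here is a minimal sketch in Python. It is not taken from the NIST report: the one-dimensional nearest-centroid classifier, the helper names `centroids` and `predict`, and all data values are assumptions chosen only to show how a few mislabeled training points can shift a model's decision.

```python
# Toy 1-D nearest-centroid classifier. All values are invented for illustration.

def centroids(samples):
    """Return {label: mean feature value} for a list of (value, label) pairs."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def predict(model, value):
    """Assign the label whose centroid lies closest to value."""
    return min(model, key=lambda label: abs(model[label] - value))

clean = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]

# Attacker-supplied training points: large values deliberately mislabeled as class 0.
poison = [(12.0, 0), (12.0, 0), (12.0, 0)]

clean_model = centroids(clean)              # class centroids at 2.0 and 8.0
poisoned_model = centroids(clean + poison)  # class-0 centroid dragged up to 7.0

probe = 6.0
print(predict(clean_model, probe))     # 1
print(predict(poisoned_model, probe))  # 0
```

The same input is classified differently once the corrupted points enter the training set, which is the effect the poisoning bullet describes. Real attacks target far larger models and datasets.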

Such attacks, NIST said, can be carried out by threat actors with full knowledge (white-box), minimal knowledge (black-box), or a partial understanding of some aspects of the AI system (gray-box).
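As a rough illustration of an evasion attack in the white-box setting, the Python sketch below assumes an attacker who knows the weights of a toy linear classifier and nudges each input feature by a small bounded amount to flip the prediction. The weights, the input, the `epsilon` budget, and the function names are hypothetical and do not come from the NIST report.

```python
# Toy linear classifier: predicts 1 when w . x + b > 0, otherwise 0.
# Weights, bias, and inputs are made-up values for illustration only.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.5

def score(x):
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS

def predict(x):
    return 1 if score(x) > 0 else 0

def sign(v):
    return (v > 0) - (v < 0)

def evade(x, epsilon):
    """White-box evasion: move every feature by epsilon in the direction
    that pushes the score toward the opposite class."""
    direction = -1 if predict(x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(WEIGHTS, x)]

original = [1.0, 1.0, 1.0]
adversarial = evade(original, epsilon=0.4)

print(predict(original))     # 1
print(predict(adversarial))  # 0
```

A black-box attacker would not have access to `WEIGHTS` and would have to estimate a useful perturbation direction from the model's responses to queries instead.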

The agency further noted the lack of robust mitigation measures to counter these risks, urging the broader tech community to “come up with better defenses.”

The development comes more than a month after the U.K., the U.S., and international partners from 16 other countries released guidelines for the development of secure artificial intelligence (AI) systems.

“Despite the significant progress AI and machine learning have made, these technologies are vulnerable to attacks that can cause spectacular failures with dire consequences,” Vassilev said. “There are theoretical problems with securing AI algorithms that simply haven’t been solved yet. If anyone says differently, they are selling snake oil.”

Some parts of this article are sourced from:

thehackernews.com
