
Security researchers have shown that ChatGPT can produce polymorphic malware that goes undetected by “most anti-malware products”.

It took experts at CyberArk Labs weeks to build a proof-of-concept for the highly evasive malware, but they eventually developed a way to execute payloads using text prompts on a victim’s PC.

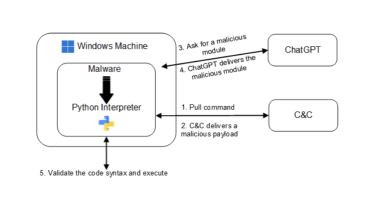

Testing the method on Windows, the researchers said a malware package could be created that contained a Python interpreter, which could be programmed to periodically query ChatGPT for new modules.

These modules could contain code, in the form of text, defining the functionality of the malware, such as code injection, file encryption, or persistence.

The malware package would then be responsible for checking whether the code would function as intended on the target system.

This could be achieved, the researchers noted, through an interaction between the malware and a command and control (C2) server.

In a use case involving a file encryption module, the functionality would come from a ChatGPT query in the form of text, and the malware would then generate a test file for the C2 server to validate.


If successfully validated, the malware would be instructed to execute the code, encrypting the files. If validation was not achieved, the process would repeat until working encryption code could be generated and validated.

The malware would use the built-in Python interpreter’s compile functionality to convert the payload code string into a code object, which could then be executed on the victim’s machine.
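The compile step mentioned above is an ordinary, documented Python feature. As a minimal and deliberately harmless sketch (the `source` string and the `add` helper are illustrative assumptions, not anything taken from the CyberArk proof-of-concept), this is how a string of text becomes a code object that the interpreter can run:

```python
# Benign illustration only: the source text defines a harmless arithmetic helper.
source = "def add(a, b):\n    return a + b\n"

# compile() turns the text into a code object; nothing is executed yet.
code_obj = compile(source, "<string>", "exec")

# exec() runs the code object, placing any definitions into the given namespace.
namespace = {}
exec(code_obj, namespace)

# The function defined in the text is now callable like any other.
result = namespace["add"](2, 3)
print(type(code_obj).__name__, result)
```

The point for defenders is that the logic exists only as text until the moment it is compiled, so a scan of the files on disk would not find it.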

“The concept of creating polymorphic malware using ChatGPT may seem daunting, but in reality, its implementation is relatively straightforward,” the researchers said.

“By utilising ChatGPT’s ability to generate various persistence techniques, anti-VM modules and other malicious payloads, the possibilities for malware development are vast.

“While we have not delved into the details of communication with the C2 server, there are several ways that this can be done discreetly without raising suspicion.”

The researchers said this method demonstrates that it is possible to develop malware that executes new or modified code, making it polymorphic in nature.

Bypassing ChatGPT’s content filter

ChatGPT’s web version is known for blocking certain text prompts because of the malicious code it could output.

CyberArk’s researchers found a workaround that involved using an unofficial open-source project which allows people to interact with ChatGPT’s API, which has not yet been officially released.

The project’s owner said in the project’s documentation that the application uses a reverse-engineered version of the official OpenAI API.

By using this version, the researchers found they could make use of ChatGPT’s technology while bypassing the content filters that would ordinarily block the generation of malicious code in the widely used web application.

“It is interesting to note that when using the API, the ChatGPT system does not seem to utilise its content filter,” the researchers said.

“It is unclear why this is the case, but it makes our task much easier as the web version tends to become bogged down with more complex requests.”

Evading security products

Because the malware receives incoming payloads in the form of text, rather than binaries, CyberArk’s researchers said it doesn’t contain suspicious logic while in memory, meaning it can evade most of the security products it was tested against.

It is particularly evasive against products that rely on signature-based detection and will bypass anti-malware scanning interface (AMSI) measures as it ultimately runs Python code, they said.

“The malware doesn’t contain any malicious code on the disk as it receives the code from ChatGPT, then validates it, and then executes it without leaving a trace in memory,” said Eran Shimony, principal cyber researcher at CyberArk Labs, to IT Pro.

“Polymorphic malware is very difficult for security products to deal with because you can’t really sign them,” he added. “Besides, they usually don’t leave any trace on the file system as their malicious code is only handled while in memory. Additionally, if one views the executable, it probably looks benign.”
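To see why exact-match signatures struggle with code that changes form, consider a simplified, harmless sketch. It assumes a "signature" is just a SHA-256 hash of the code text; real products use more sophisticated techniques, and the names `signature`, `variant_a`, `variant_b` and `run_total` are hypothetical. Two snippets that behave identically still produce different hashes:

```python
import hashlib


def signature(payload: str) -> str:
    """Return a SHA-256 hex digest, standing in for an exact-match signature."""
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


# Two harmless snippets that compute the same result in different ways.
variant_a = "def total(values):\n    return sum(values)\n"
variant_b = (
    "def total(items):\n"
    "    acc = 0\n"
    "    for item in items:\n"
    "        acc += item\n"
    "    return acc\n"
)

# Suppose only the first variant has ever been seen and catalogued.
known_signatures = {signature(variant_a)}


def run_total(src: str) -> int:
    """Compile the snippet and call its total() on a fixed input."""
    namespace = {}
    exec(compile(src, "<string>", "exec"), namespace)
    return namespace["total"]([1, 2, 3])


same_behaviour = run_total(variant_a) == run_total(variant_b)
matched_a = signature(variant_a) in known_signatures
matched_b = signature(variant_b) in known_signatures
print(same_behaviour, matched_a, matched_b)
```

Under these assumptions the second variant behaves the same as the first yet matches no known signature, which is the gap that behaviour-based detection aims to close.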

“A very real concern”

The researchers said that a cyber attack using this method of malware delivery “is not just a hypothetical scenario but a very real concern”.

When asked about the day-to-day risk to IT teams around the world, Shimony cited the difficulty of detection as a chief concern.

“Most anti-malware products are not aware of the malware,” he said. “Further research is needed to make anti-malware solutions better against it.”

Some parts of this article are sourced from:

www.itpro.co.uk
