Cybersecurity researchers have discovered a novel attack that uses stolen cloud credentials to target cloud-hosted large language model (LLM) services, with the goal of selling access to other threat actors.

The Sysdig Threat Research Team has codenamed the attack technique LLMjacking.

“Once initial access was obtained, they exfiltrated cloud credentials and gained access to the cloud environment, where they attempted to access local LLM models hosted by cloud providers,” security researcher Alessandro Brucato said. “In this instance, a local Claude (v2/v3) LLM model from Anthropic was targeted.”


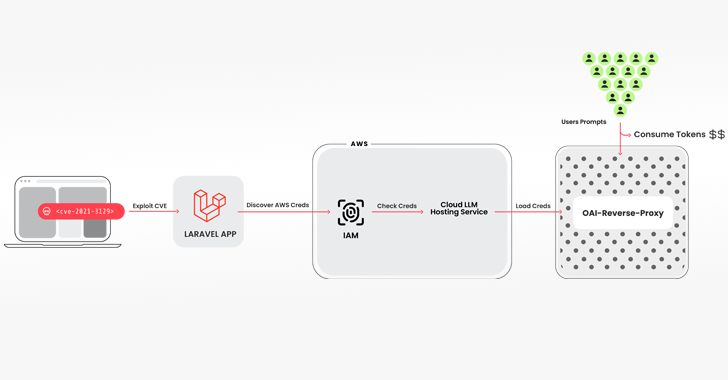

The intrusion pathway used to pull off the scheme begins with breaching a system that runs a vulnerable version of the Laravel Framework (e.g., CVE-2021-3129). The attackers then obtain Amazon Web Services (AWS) credentials and use them to access the LLM services.
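Per NVD, CVE-2021-3129 affects Ignition (the `facade/ignition` package) before 2.5.2. It is exploitable when the Laravel application runs with debug mode enabled. As a vulnerability-management aid, the minimal sketch below flags vulnerable Ignition versions in a parsed `composer.lock`. The function names are hypothetical, and the sketch deliberately skips non-numeric versions such as `dev-master` rather than guessing. It does not check whether debug mode is actually on.

```python
from typing import Optional

# Per NVD, CVE-2021-3129 affects facade/ignition before 2.5.2
# (exploitable when the Laravel app runs with debug mode enabled).
FIXED_IGNITION = (2, 5, 2)


def parse_version(version: str) -> Optional[tuple]:
    """Turn a Composer version such as 'v2.5.1' into (2, 5, 1).

    Returns None for non-numeric versions (e.g. 'dev-master'), which this
    sketch does not try to interpret.
    """
    core = version.lstrip("vV").split("-")[0].split("+")[0]
    if not core or not core[0].isdigit():
        return None
    parts = []
    for piece in core.split("."):
        digits = "".join(ch for ch in piece if ch.isdigit())
        parts.append(int(digits) if digits else 0)
    return tuple(parts)


def find_vulnerable_ignition(lock: dict) -> list:
    """List facade/ignition entries in a parsed composer.lock older than 2.5.2."""
    hits = []
    # Ignition is usually a dev dependency, so check both sections.
    for section in ("packages", "packages-dev"):
        for pkg in lock.get(section, []):
            if pkg.get("name") != "facade/ignition":
                continue
            version = parse_version(pkg.get("version", ""))
            if version is not None and version < FIXED_IGNITION:
                hits.append(f"{pkg['name']} {pkg['version']} ({section})")
    return hits
```

To use it, load the project's `composer.lock` with `json.load` and pass the resulting dict to `find_vulnerable_ignition`. An empty list means no Ignition release older than 2.5.2 was found.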

The tools used include an open-source Python script that checks and validates keys for various offerings from Anthropic, AWS Bedrock, Google Cloud Vertex AI, Mistral, and OpenAI, among others.

“No legitimate LLM queries were actually run during the verification phase,” Brucato explained. “Instead, just enough was done to figure out what the credentials were capable of and any quotas.”

The keychecker also integrates with another open-source tool, oai-reverse-proxy, which acts as a reverse proxy server for LLM APIs. This suggests that the threat actors are likely providing access to the compromised accounts without actually exposing the underlying credentials.
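The reason a reverse proxy hides the stolen keys can be shown with a minimal sketch of the general pattern. This is not oai-reverse-proxy's actual code. Callers authenticate to the proxy with a proxy-issued token, the proxy strips that token, and it attaches an upstream key that only the proxy holds. The function names, the stripped header set, and the `Bearer` scheme are all assumptions, since LLM providers differ in which header they expect.

```python
# Header names (lower-cased) that carry credentials or are set by the HTTP
# client itself; none of them should be forwarded from the caller as-is.
STRIPPED_HEADERS = {"authorization", "proxy-authorization", "x-api-key", "host"}


def authorize_client(client_headers: dict, proxy_tokens: set) -> bool:
    """Check the caller's proxy-issued token (never the upstream key)."""
    for name, value in client_headers.items():
        if name.lower() == "authorization" and value.startswith("Bearer "):
            return value[len("Bearer "):] in proxy_tokens
    return False


def build_upstream_headers(client_headers: dict, upstream_key: str) -> dict:
    """Drop caller credentials and attach the key held only by the proxy."""
    forwarded = {
        name: value
        for name, value in client_headers.items()
        if name.lower() not in STRIPPED_HEADERS
    }
    # The upstream key is added server-side, so callers never receive it.
    forwarded["Authorization"] = f"Bearer {upstream_key}"
    return forwarded
```

The upstream key only ever appears on the proxy's outbound request to the provider. Revoking a caller's proxy token therefore cuts off that caller, but the underlying cloud credential keeps working for everyone else. Conversely, rotating the victim's credential cuts off every caller at once.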

“If the attackers were gathering an inventory of useful credentials and wanted to sell access to the available LLM models, a reverse proxy like this could allow them to monetize their efforts,” Brucato said.

The attackers were also observed querying logging settings, likely in an attempt to evade detection while using the compromised credentials to run their prompts.
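Defenders can watch for this reconnaissance in AWS CloudTrail. The minimal sketch below filters CloudTrail records for Amazon Bedrock calls that read or change model invocation logging settings, then reports those made by principals outside an allowlist. It assumes records in CloudTrail's JSON shape (`eventSource`, `eventName`, `userIdentity.arn`, `sourceIPAddress`, `eventTime`, optional `errorCode`). The function name and the allowlist approach are hypothetical choices for illustration.

```python
from typing import Iterable

BEDROCK_SOURCE = "bedrock.amazonaws.com"
# Bedrock API calls that read or change model invocation logging settings.
LOGGING_CONFIG_EVENTS = {
    "GetModelInvocationLoggingConfiguration",
    "PutModelInvocationLoggingConfiguration",
    "DeleteModelInvocationLoggingConfiguration",
}


def logging_config_activity(records: Iterable[dict], allowed_arns: set) -> list:
    """Return Bedrock logging-config calls made by principals not on the allowlist."""
    findings = []
    for rec in records:
        if rec.get("eventSource") != BEDROCK_SOURCE:
            continue
        if rec.get("eventName") not in LOGGING_CONFIG_EVENTS:
            continue
        arn = rec.get("userIdentity", {}).get("arn", "<unknown>")
        if arn in allowed_arns:
            continue
        findings.append({
            "time": rec.get("eventTime"),
            "principal": arn,
            "event": rec["eventName"],
            "source_ip": rec.get("sourceIPAddress"),
            "error": rec.get("errorCode"),  # None when the call succeeded
        })
    return findings
```

CloudTrail delivers log files as gzipped JSON objects with a top-level `Records` list. That list, after decompression and `json.load`, is what `records` expects here.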

This marks a departure from attacks that focus on prompt injection and model poisoning. Instead, it lets attackers monetize their access to the LLMs while the cloud account owner foots the bill without their knowledge or consent.

Sysdig said that an attack of this kind could rack up more than $46,000 in LLM consumption costs per day for the victim.
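Daily figures like this follow from simple arithmetic: sustained token throughput × per-token price × minutes per day × the number of regions being abused. The sketch below computes that product. It does not reproduce Sysdig's calculation, and every input (throughput, per-1,000-token prices, region count) is a hypothetical placeholder rather than actual provider pricing.

```python
MINUTES_PER_DAY = 24 * 60


def daily_cost_usd(
    input_tokens_per_min: float,
    output_tokens_per_min: float,
    usd_per_1k_input: float,
    usd_per_1k_output: float,
    regions: int = 1,
) -> float:
    """Cost of one day of sustained usage, given per-1,000-token prices.

    All arguments are caller-supplied assumptions; input and output tokens
    are priced separately because many LLM APIs bill them at different rates.
    """
    per_minute = (
        input_tokens_per_min / 1000 * usd_per_1k_input
        + output_tokens_per_min / 1000 * usd_per_1k_output
    )
    return per_minute * MINUTES_PER_DAY * regions
```

With made-up values, `daily_cost_usd(100_000, 20_000, 0.008, 0.024, regions=4)` works out to about $7,373 per day. The cost scales linearly with throughput and with the number of regions an attacker can drive at full quota.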

“The use of LLM services can be expensive, depending on the model and the number of tokens being fed to it,” Brucato said. “By maxing out the quota limits, attackers can also block the compromised organization from using models legitimately, disrupting business operations.”

Organizations are advised to enable detailed logging and to monitor cloud logs for suspicious or unauthorized activity. They should also ensure that effective vulnerability management processes are in place to prevent initial access.
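One way to act on that advice is to baseline who invokes models and where. The minimal sketch below counts Bedrock model invocations per (principal, region) pair and keeps only those from principals or regions outside an expected baseline, since stolen keys are often used from unfamiliar identities or regions. It assumes the account's CloudTrail configuration captures `InvokeModel` and `InvokeModelWithResponseStream` events. The function name, allowlist, and region baseline are hypothetical inputs an organization would define for itself.

```python
from collections import Counter
from typing import Iterable

INVOKE_EVENTS = {"InvokeModel", "InvokeModelWithResponseStream"}


def unexpected_invocations(
    records: Iterable[dict], allowed_arns: set, expected_regions: set
) -> Counter:
    """Count Bedrock invocations by (principal, region) outside the baseline."""
    counts = Counter()
    for rec in records:
        if rec.get("eventSource") != "bedrock.amazonaws.com":
            continue
        if rec.get("eventName") not in INVOKE_EVENTS:
            continue
        arn = rec.get("userIdentity", {}).get("arn", "<unknown>")
        region = rec.get("awsRegion", "<unknown>")
        # Flag either an unknown identity or a known identity in an odd region.
        if arn not in allowed_arns or region not in expected_regions:
            counts[(arn, region)] += 1
    return counts
```

Sorting the result with `counts.most_common()` surfaces the heaviest unexpected usage first. These pairs are the ones most likely to be driving an unexplained bill.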


Some parts of this article are sourced from:

thehackernews.com
