Microsoft on Monday said it took steps to correct a glaring security gaffe that led to the exposure of 38 terabytes of private data.

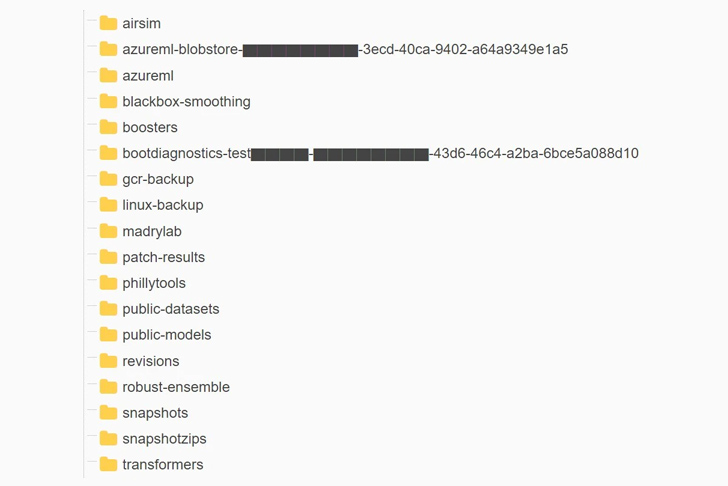

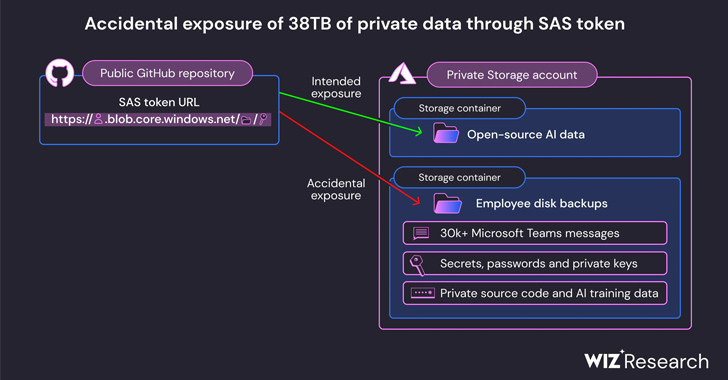

The leak was discovered on the company’s AI GitHub repository and is said to have been inadvertently made public when publishing a bucket of open-source training data, Wiz said. It also included a disk backup of two former employees’ workstations containing secrets, keys, passwords, and more than 30,000 internal Teams messages.

The repository, named “robust-models-transfer,” is no longer accessible. Prior to its takedown, it featured source code and machine learning models pertaining to a 2020 research paper titled “Do Adversarially Robust ImageNet Models Transfer Better?”

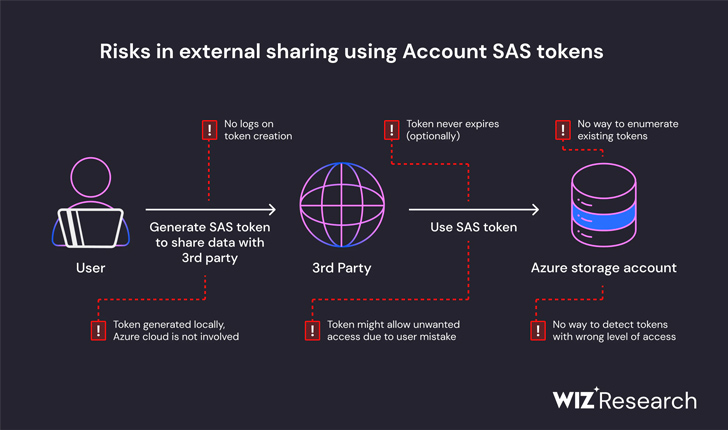

“The exposure came as the result of an overly permissive SAS token – an Azure feature that allows users to share data in a manner that is both hard to track and hard to revoke,” Wiz said in a report. The issue was reported to Microsoft on June 22, 2023.

Specifically, the repository’s README.md file instructed developers to download the models from an Azure Storage URL that accidentally also granted access to the entire storage account, thereby exposing additional private data.

“In addition to the overly permissive access scope, the token was also misconfigured to allow “full control” permissions instead of read-only,” Wiz researchers Hillai Ben-Sasson and Ronny Greenberg said. “Meaning, not only could an attacker view all the files in the storage account, but they could delete and overwrite existing files as well.”
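The two problems the researchers describe, scope and permissions, are both visible in the query string of a SAS URL. The following is a minimal sketch, not Wiz's or Microsoft's tooling, of how such a URL could be inspected with the Python standard library. It relies on the documented SAS query parameters (`ss` and `srt` for account-level scope, `sp` for permissions, `se` for expiry). The function name `describe_sas`, the `MUTATING` set, and the example URLs are hypothetical and do not reproduce the token from the incident.

```python
from urllib.parse import parse_qs, urlparse

# Assumption: treat write, delete, add, create and update as "can modify data".
MUTATING = set("wdacu")


def describe_sas(url: str) -> dict:
    """Summarize the scope and permissions encoded in a SAS URL."""
    query = parse_qs(urlparse(url).query)

    def first(key: str) -> str:
        return query.get(key, [""])[0]

    permissions = set(first("sp"))
    return {
        # Account SAS tokens carry both signed services (ss) and
        # signed resource types (srt); service SAS tokens do not.
        "account_level": bool(first("ss") and first("srt")),
        "permissions": "".join(sorted(permissions)),
        "can_modify": bool(permissions & MUTATING),
        "expiry": first("se"),
    }


# Hypothetical URLs for illustration only.
broad = ("https://exampleaccount.blob.core.windows.net/models"
         "?sv=2020-08-04&ss=b&srt=sco&sp=rwdlac"
         "&se=2050-01-01T00:00:00Z&sig=REDACTED")
narrow = ("https://exampleaccount.blob.core.windows.net/models/resnet.pt"
          "?sv=2020-08-04&sr=b&sp=r&se=2023-07-01T00:00:00Z&sig=REDACTED")

print(describe_sas(broad))
print(describe_sas(narrow))
```

In this sketch the first URL is reported as account-level with modify rights, while the second is a read-only token scoped to a single blob, which is closer to what a public download link needs.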

In response to the findings, Microsoft said its investigation found no evidence of unauthorized exposure of customer data and that “no other internal services were put at risk because of this issue.” It also emphasized that customers need not take any action on their part.

The Windows maker further noted that it revoked the SAS token and blocked all external access to the storage account. The problem was resolved two days after responsible disclosure.

To mitigate such risks going forward, the company has expanded its secret scanning service to include any SAS token that may have overly permissive expirations or privileges. It said it also identified a bug in its scanning system that flagged the specific SAS URL in the repository as a false positive.
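The kind of check described here can be approximated in a few lines. The sketch below is an illustration only and is not Microsoft's secret scanning service: it searches a block of text, such as a README, for Azure Storage URLs that carry a SAS signature and reports those with account-level scope, modify permissions, or a long lifetime. The regular expression, the seven-day `MAX_LIFETIME` threshold, the function name `find_risky_sas`, and the sample URL are all assumptions chosen for the example.

```python
import re
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlparse

# Loose pattern (an assumption) for Azure Storage URLs that include sig=...
SAS_URL = re.compile(
    r"https://[\w.-]+\.core\.windows\.net/[^\s\"'<>)]*[?&]sig=[^\s\"'<>)]+"
)
MAX_LIFETIME = timedelta(days=7)  # assumed policy threshold


def find_risky_sas(text: str, now: datetime) -> list[tuple[str, list[str]]]:
    """Return (url, reasons) pairs for SAS URLs that look overly permissive."""
    findings = []
    for url in SAS_URL.findall(text):
        query = parse_qs(urlparse(url).query)
        reasons = []
        if "ss" in query and "srt" in query:
            reasons.append("account-level SAS")
        if set(query.get("sp", [""])[0]) & set("wdacu"):
            reasons.append("write or delete permission")
        expiry = query.get("se", [""])[0]
        try:
            expires_at = datetime.fromisoformat(expiry.replace("Z", "+00:00"))
        except ValueError:
            # Tokens tied to a stored access policy may omit se;
            # this sketch flags them for manual review.
            reasons.append("missing or unparseable expiry")
        else:
            if expires_at.tzinfo is None:
                expires_at = expires_at.replace(tzinfo=timezone.utc)
            if expires_at - now > MAX_LIFETIME:
                reasons.append("expiry beyond allowed lifetime")
        if reasons:
            findings.append((url, reasons))
    return findings


# Hypothetical README text for illustration only.
readme = ("Download the models from "
          "https://exampleaccount.blob.core.windows.net/models"
          "?sv=2020-08-04&ss=b&srt=sco&sp=rwdlac"
          "&se=2050-01-01T00:00:00Z&sig=REDACTED and unpack them.")

for url, reasons in find_risky_sas(readme, datetime.now(timezone.utc)):
    print(url, "->", ", ".join(reasons))
```

A production scanner would need to cover more URL shapes and handle tokens whose expiry is defined by a stored access policy rather than in the URL itself.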

“Due to the lack of security and governance over Account SAS tokens, they should be considered as sensitive as the account key itself,” the researchers said. “Therefore, it is highly recommended to avoid using Account SAS for external sharing. Token creation mistakes can easily go unnoticed and expose sensitive data.”

This is not the first time misconfigured Azure storage accounts have come to light. In July 2022, JUMPSEC Labs highlighted a scenario in which a threat actor could take advantage of such accounts to gain access to an enterprise on-premise environment.

The development is the latest security blunder at Microsoft and comes roughly two months after the company revealed that hackers based in China were able to infiltrate the company’s systems and steal a highly sensitive signing key by compromising an engineer’s corporate account and likely accessing a crash dump of the consumer signing system.

“AI unlocks huge potential for tech companies. However, as data scientists and engineers race to bring new AI solutions to production, the massive amounts of data they handle require additional security checks and safeguards,” Wiz CTO and co-founder Ami Luttwak said in a statement.

“This emerging technology requires large sets of data to train on. With many development teams needing to manipulate massive amounts of data, share it with their peers or collaborate on public open-source projects, cases like Microsoft’s are increasingly hard to monitor and avoid.”

Some parts of this article are sourced from:

thehackernews.com
